Investigation Finds AI Algorithms Objectify Women’s Bodies

Have you ever wondered how and why social media platforms determine what content you see—and what harm, unintentional or otherwise, these invisible systems might cause?

This is one of many questions the Pulitzer Center explores as we delve into technology’s human impact on our everyday lives through the AI Accountability Network.

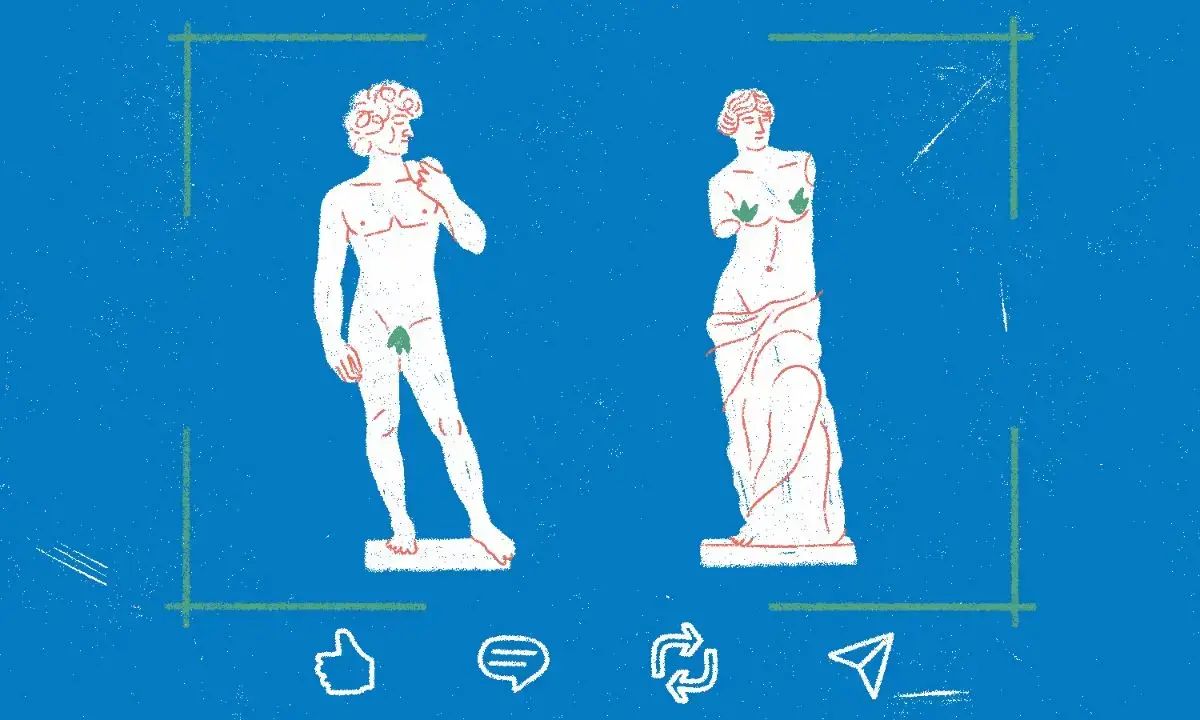

Most images uploaded to social media are analyzed by artificial intelligence (AI) algorithms meant to protect users from violent or pornographic visuals. These algorithms label images and help determine what is shown to users. Companies that create and sell these AI tools, including Google, Microsoft, and Amazon, claim that their tools can determine how sexually suggestive an image is.

In a new investigation for The Guardian, AI Fellow and New York University Professor Hilke Schellmann and AI researcher Gianluca Mauro analyzed hundreds of images of men and women using these AI tools, and found that the tools rate photos of women in everyday situations as more sexually suggestive than those of men, especially if nipples, pregnant bellies, or exercise are involved.

Mauro even ran photos of himself through these tools as part of the experiment. Being shirtless didn't raise his "racy" score, but wearing a bra raised it from 22% to 97%. The reporting is also accompanied by a quiz that reveals the real (and shocking) ratings: photos of women in everyday situations were scored as far more sexually suggestive than comparable photos of men.

This is just the beginning of unpacking the real-world consequences of gender bias in these AI tools, which may be suppressing the visibility of countless photos featuring women’s bodies, including on Instagram and LinkedIn. The investigation is filled with stories of women being “shadowbanned” for opaque reasons; for many, this harms their businesses and livelihoods.

“Objectification of women seems deeply embedded in the system,” said Leon Derczynski, a professor of computer science at the IT University of Copenhagen, who specializes in online harm.

Read the full investigation and its findings in The Guardian. If you're an educator looking to engage your students with this story, explore the lesson plan "Evaluating AI's Impact on Everyday Life," featuring reporting from our AI Fellows and grantees from the Associated Press, The Dallas Morning News, MIT Technology Review, and more.

Best,

IMPACT

PBS NewsHour was honored at the duPont-Columbia University Awards for its Pulitzer Center-supported reporting on the war in Ukraine and its coverage of the U.S. withdrawal from Afghanistan.

Grantee Jane Ferguson led the coverage in Afghanistan. “Ferguson’s reporting highlighted the Afghan government’s dire situation as it struggled frantically to hold off the Taliban,” according to PBS NewsHour.

The Ukraine coverage was reported by foreign affairs and defense correspondent Nick Schifrin and a team of journalists.

“NewsHour correspondents and producers brought the reality of major war in Europe home to viewers across the spectrum,” PBS said in a press release.

This message first appeared in the February 10, 2023, edition of the Pulitzer Center's weekly newsletter.